Plans of Reason: Strawberry Fields Forever?

How advanced is OpenAI's latest reasoning model o1-preview, codename 'Strawberry'?

A. Introduction

On 12 September 2024 OpenAI released its new model, the o1-preview, also dubbed ‘Strawberry’. The company now synonymous with the cutting edge of AI describes its o1 model as the first of

“a new series of AI models designed to spend more time thinking before they respond[, so that t]hey can reason through complex tasks and solve harder problems than previous models in science, coding, and math.”

This has stirred excitement but also scepticism in the AI community, where some herald the o1 as the first in a new class of true reasoning models, and others dismiss it as a contraption devoid of any practical usability, because it is way too eager to ‘figure things out’ but essentially ‘fails to listen’.

Researchers immediately took to stress-testing o1’s performance on the tasks that LLMs often struggle with the most and that o1 is touted to have mastered: reasoning and planning. PlanBench, first introduced in 2022, is an extensible benchmark created to evaluate exactly this capability in LLMs.

If you have read Guaranteed Dissent in the past, you should have a sense that I am sceptical of putting a lot of emphasis on too many benchmarks. Yet they do exist and at the very least they can serve as an upper bound of what models can or cannot do.

Historically, PlanBench has been a significant challenge for LLMs, underlining their inability to generalise or problem-solve over multiple steps. With o1 positioned as a reasoning-centric model, the question now is: will it be as influential on AI planning as the 1967 Beatles song that lends this post its title was for the then emerging psychedelic music genre and the medium of music video?

B. How Does PlanBench work?

Planning of any kind involves at the very least 2 critical steps: ‘initial plan generation’ and ‘cost optimal planning’. One has to formulate a plan to begin with and then evaluate whether it is the shortest route to the identified goal. Though there are other, auxiliary tasks that feed into planning, such as robustness to goal reformulation, reusability of plans, replanning and plan generalisation, the evaluation here focuses on the first 2 aspects only. For this PlanBench relies on Blocksworld.

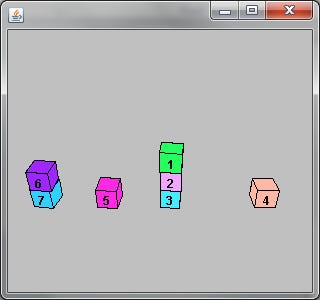

Blocksworld

Blocksworld is a series of tasks that involve manipulating blocks to achieve specific configurations. In the classical problem, an AI system is given a set of blocks and a goal configuration (e.g., stacking blocks in a particular order). The AI system must then devise a sequence of actions to reach the goal, which requires understanding the relationships between the blocks and carefully planning each move. Though – much like ARC-AGI – this is for the most part a very simple task for humans, it encapsulates many of the difficulties inherent in reasoning: non-trivial planning, state-space exploration and adherence to constraints.

The descriptions of the actions look something like this:

Pick up a block

Unstack a block from on top of another block

Put down a block

Stack a block on top of another block

Mystery Blocksworld

To increase difficulty and evaluate more abstract reasoning Mystery Blocksworld is a variation that obscures the problem’s structure. The same underlying task is presented, but the terminology or presentation is intentionally altered to prevent models from relying on pattern recognition alone. This forces the AI system to ‘understand’ the problem rather than to rely on memorised solutions. It has proven particularly challenging for LLMs, as they often perform poorly when surface-level patterns are hidden or distorted.

The obfuscated descriptions of the actions in Mystery Blocksworld look something like this:

Attack object

Feast object from another object

Succumb object

Overcome object from another object

C. Previous Best Performance

Historically, the human baseline on PlanBench was around 78% for plan generation and 70% for optimal planning, which goes to show that the problem isn’t trivial, though the numbers could also be attributed to the relatively small study used to establish the baseline, with only 50 participants. Until recently LLMs have fared poorly on Blocksworld, hovering around 35%. The only notable exceptions were Anthropic’s Claude 3.5 Sonnet (57.6%) and Meta’s LLaMA 3.1 405B (62.6%). OpenAI’s flagship GPT-4o sat at 35.5%, which was actually worse than GPT-4 Turbo (40.1%).

Despite their large parameter counts and the vast amounts of training data, LLMs’ performance on Mystery Blocksworld was atrocious across the board at less than 5%.

Just for reference, machines can solve the PlanBench tasks with perfection, just not LLMs. Fast Downward is a domain-independent planner that uses a combination of algorithms like forward search, symbolic search and heuristics, which guide the search toward the goal while minimising computational effort. In both Blocksworld and Mystery Blocksworld (both ‘classic’ and ‘randomised’ – see below), Fast Downward quickly and consistently achieves perfect scores of 100% accuracy, providing the solution near-instantaneously within ca. 0.25 seconds.
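To make the contrast with classical planners concrete, here is a minimal sketch of a Blocksworld solver. It is a plain breadth-first search, not Fast Downward’s actual algorithm, and all names (`successors`, `find_plan`, `MYSTERY_NAMES`) are hypothetical. The mapping between classical and obfuscated action names is an assumption based on the order in which the two lists appear above.

```python
from collections import deque

# Assumed mapping: classical action name -> Mystery Blocksworld name.
MYSTERY_NAMES = {"pick up": "attack", "unstack": "feast",
                 "put down": "succumb", "stack": "overcome"}

def successors(state):
    """Yield (action, next_state) for the four classical actions.

    A state is (stacks, holding): a frozenset of bottom-to-top tuples,
    plus the block currently in the hand (or None).
    """
    stacks, holding = state
    if holding is None:
        for stack in stacks:
            rest, top = stacks - {stack}, stack[-1]
            if len(stack) == 1:
                yield ("pick up", top), (rest, top)
            else:
                yield ("unstack", top, stack[-2]), (rest | {stack[:-1]}, top)
    else:
        yield ("put down", holding), (stacks | {(holding,)}, None)
        for stack in stacks:
            moved = (stacks - {stack}) | {stack + (holding,)}
            yield ("stack", holding, stack[-1]), (moved, None)

def on_pairs(stacks):
    """Return the set of (upper, lower) facts that hold in these stacks."""
    return {(s[i + 1], s[i]) for s in stacks for i in range(len(s) - 1)}

def find_plan(initial_stacks, goal_on):
    """Breadth-first search: shortest plan, or None if the goal is unreachable."""
    start = (frozenset(initial_stacks), None)
    queue, seen = deque([(start, [])]), {start}
    while queue:
        state, path = queue.popleft()
        stacks, holding = state
        if holding is None and goal_on <= on_pairs(stacks):
            return path
        for action, nxt in successors(state):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [action]))
    return None  # state space exhausted: no plan exists

def obfuscate(plan):
    """Rename the actions of a plan into their Mystery Blocksworld names."""
    return [(MYSTERY_NAMES[a[0]],) + a[1:] for a in plan]

# C sits on A, B is on the table; the goal is A on B and B on C.
example_plan = find_plan([("A", "C"), ("B",)], {("A", "B"), ("B", "C")})
mystery_plan = obfuscate(example_plan)
# A contradictory goal can never be reached, so the search returns None.
impossible = find_plan([("A", "C"), ("B",)], {("A", "B"), ("B", "A")})
```

Because the search works on the structure of the problem rather than on the wording, renaming the actions changes nothing for it. Exhausting the state space also gives a definite answer when no plan exists.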

D. o1 Performance

OpenAI’s o1 model showed a significant performance boost in these planning benchmarks. In the classical Blocksworld domain, o1 achieved a 97.8% accuracy, far surpassing all previously tested models. On Mystery Blocksworld, where the obfuscated task is designed to throw off pattern-based systems, o1 scored 52.8%. Although still far from perfect, this is a dramatic improvement compared to the sub-5% scores achieved by previous LLMs.

To check whether the exact obfuscation of Mystery Blocksworld was learned by the o1 model as part of its training, the researchers also generated a randomised Mystery Blocksworld test set, where additional noise and randomness were introduced to generate different obfuscations. While performance did dip further to only 37.3%, this still represents a sharp contrast to the flat zeroes seen when testing previous models.

As a side-note, and follow-up to a previous post, o1 hasn’t proven to be a game changer in solving the ARC-AGI puzzle. It comes in at 21%, in line with Anthropic’s Sonnet 3.5, and ahead of GPT-4o with a mere 9%.

E. How does it seem to work?

OpenAI has been rather tight-lipped about the inner workings of the o1 model. Without providing further details they only explain:

“We trained the[ o1] models to spend more time thinking through problems before they respond, much like a person would. Through training, they learn to refine their thinking process, try different strategies, and recognize their mistakes.”

‘Much like a person would’ is almost certainly a case of marketing speak, but when you use o1 you will notice that it does take significantly longer before responding. I think OpenAI made a sensible UX choice by displaying what is going on while you wait for the system to respond. As a side-note, this was not the case during the first few days after o1’s release and I suspect that OpenAI only realised afterwards that users were getting confused by the surprisingly long wait time, thinking their app or web instance had crashed.

Hidden Chain-of-Thought

While the details are speculation at this point, o1 appears to run through a ‘hidden’ Chain-of-Thought (CoT) process while you wait for its response. OpenAI took a deliberate decision to hide this CoT process and claims to have done so in order to study the model’s thought process and evaluate it for user manipulation, though the truth likely rests mostly elsewhere (see below).

Nota bene: CoT is a method used in AI to improve how models reason and solve more complex problems by forcing the model to break tasks down into smaller, logical steps. This scaffolding is meant to prompt the model to explain its thinking process step-by-step, like how a person might show their work when solving a math problem, instead of generating an answer directly. With mixed success this theoretically helps models to better handle tasks that require reasoning across multiple steps sometimes reducing errors.

The system is bolstered by specific reinforcement learning that steers the creation, curation and final selection of a CoT, tracing different reasoning paths that represent the step-by-step thought processes the model might use to arrive at an answer. As such o1 doesn’t only generate text, but goes a step further than previous models by evaluating different paths in the background. It wouldn’t be surprising if this process was achieved via a specialised GPT-4o model, conceptually similar to CriticGPT, which OpenAI showcased earlier this year.

Comparing expected rewards

The additional reinforcement learning (RL) could be part of model pre-training – the first step of model training where RL is not typically applied – and its result could be the memorisation of the q-values from countless examples of step-by-step reasoning paths.

Nota bene: In RL the q-value (or action-value) represents the cumulative future reward the model expects when taking a certain action in a given state and following a determined policy thereafter.
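In standard textbook notation, which is not taken from anything OpenAI has published about o1, the q-value can be written as follows. Here $s$ is the state, $a$ the action, $\pi$ the policy followed afterwards, $r_{t+1}$ the reward received after step $t$, and $\gamma \in [0, 1]$ a discount factor (an assumption of this formulation that weights near-term rewards more heavily):

$$
Q^{\pi}(s, a) = \mathbb{E}_{\pi}\left[\, \sum_{t=0}^{\infty} \gamma^{t}\, r_{t+1} \;\middle|\; s_0 = s,\ a_0 = a \right]
$$

Choosing the most promising action in state $s$ then amounts to picking $a^{*} = \arg\max_{a} Q^{\pi}(s, a)$.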

By learning q-values for different CoTs, o1 might be able to evaluate which reasoning paths are likely to lead to the best outcomes.

CoT on informal language and at inference

All of this then seems to come to fruition at inference, the process of generating an output, when o1 seems to perform something akin to ‘rollouts’, where the model simulates the outcomes of different CoT reasoning paths before selecting the most promising one, i.e. the path with the highest expected reward.
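As a purely illustrative toy, and in no way a description of o1’s undisclosed internals, selecting a path by rollouts can be sketched like this. Candidate paths are just labels, `toy_simulate` is a hypothetical stand-in for simulating a path’s outcome, and the success probabilities are invented for the example.

```python
import random
from statistics import mean

def estimate_value(path, simulate, n_rollouts, rng):
    """Monte Carlo estimate of expected reward: average over simulated rollouts."""
    return mean(simulate(path, rng) for _ in range(n_rollouts))

def select_path(candidates, simulate, n_rollouts=200, seed=0):
    """Score every candidate path and return the best one with all scores."""
    rng = random.Random(seed)  # seeded so the example is reproducible
    scores = {p: estimate_value(p, simulate, n_rollouts, rng) for p in candidates}
    return max(scores, key=scores.get), scores

# Invented numbers: each toy path succeeds with a hidden probability.
TOY_SUCCESS_PROB = {"path A": 0.2, "path B": 0.9, "path C": 0.5}

def toy_simulate(path, rng):
    """Return reward 1.0 if this simulated rollout succeeds, else 0.0."""
    return 1.0 if rng.random() < TOY_SUCCESS_PROB[path] else 0.0

best_path, path_scores = select_path(list(TOY_SUCCESS_PROB), toy_simulate)
```

The selector never sees the hidden probabilities. It only sees rollout outcomes, which is why more rollouts (and therefore more compute and time) buy a more reliable choice.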

An aspect worth underlining is that o1 shows a working example of applying CoT reasoning search to informal language as opposed to formal languages like programming or math. The second half of this story is CoT application during inference, because even the harshest critics of LLMs posit that iterated CoT genuinely unlocks greater generalisation skills, where automated iterative re-prompting enables the model to better adapt to novelty. A single inference pass can hardly be more than reapplying memorised programs, but generating intermediate CoT outputs, examining them and moving forward is one step closer to unlocking on-the-fly learning and adaptation.

A lot of this remains conjecture not only at this point, but potentially for a while longer: OpenAI has reportedly threatened to revoke o1 access for users who attempt to probe into the internal reasoning process of its o1-model. This opacity limits external validation and critical understanding of how o1 functions, making it difficult to assess or scaffold in practical applications. At the same time, other companies are relying on OpenAI’s models to train theirs, so one can understand why OpenAI is protective of its youngest family member.

F. Limitations

1. Cost

o1’s advancements in reasoning come at a steep cost – pun intended. Under what has become the industry-standard pricing model, providers have so far charged based on the number of input and output tokens; essentially prompt (+ system prompt, if you use one) + answer. OpenAI introduces a new metric for o1, the ‘reasoning tokens’, which are generated internally during the reasoning process.

While unseen by users, these noticeably raise the cost of running o1 compared to other models: running 100 instances of PlanBench on GPT-4 – OpenAI’s second most expensive model to use – totals USD 1.80, whereas o1 comes in at USD 42.12 or roughly 23x the price, making it impractical for widespread adoption without significant financial resources or any ability to control the extent of the reasoning process.
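The arithmetic behind the price gap is short enough to spell out. The two totals are the figures quoted above; `billed_cost` is a hypothetical helper that assumes reasoning tokens are charged at the same rate as visible output tokens, and its prices are parameters rather than real list prices.

```python
GPT4_USD_PER_100 = 1.80   # 100 PlanBench instances on GPT-4 (from the text)
O1_USD_PER_100 = 42.12    # 100 PlanBench instances on o1 (from the text)

# How many times more expensive o1 is for the same workload.
cost_ratio = O1_USD_PER_100 / GPT4_USD_PER_100

# Extra cost per single instance, in USD.
extra_usd_per_instance = (O1_USD_PER_100 - GPT4_USD_PER_100) / 100

def billed_cost(input_tokens, visible_output_tokens, reasoning_tokens,
                price_in, price_out):
    """Cost of one call when hidden reasoning tokens are billed like output.

    Assumption: reasoning tokens are charged at the output-token rate.
    Prices are per token and supplied by the caller.
    """
    billed_output = visible_output_tokens + reasoning_tokens
    return input_tokens * price_in + billed_output * price_out

print(f"o1 costs about {cost_ratio:.1f}x as much as GPT-4")
```

The ratio works out to about 23.4. The helper shows why the bill can grow even when the visible answer stays the same length: the hidden tokens are added to the billed output.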

2. Speed

Considering how we believe o1 to work, it can’t come as a surprise that where o1 improves in performance, it sacrifices speed. The speed of execution wasn’t previously considered as part of PlanBench, because the time taken by ‘simple’ LLMs to produce an output depends almost entirely on the length of that output, but is otherwise independent of the semantic content or difficulty of the question.

o1 takes on average at least 40 seconds and up to nearly 2 mins to complete Blocksworld problems. For reference, the perfect performance of Fast Downward is obtained within ca. 0.25 seconds, or at least 150 times faster.

Of course we can expect this time window to reduce over time, but for the moment o1’s sluggish performance makes it unsuitable for real-time applications.

3. Accuracy over higher step count

Although o1 excels in smaller, simpler instances of Blocksworld, its performance degrades rapidly as the complexity of the problem increases. For problems involving more than 20 steps, o1 only manages a 23.6% success rate, highlighting its difficulty in scaling reasoning to larger, more intricate tasks. Upward of 30 steps performance goes essentially to 0.

‘Come on, you are being way too critical!’ you think? Well, let’s consider this very simple case of replying to an email that asks for a meeting, giving 2 alternate dates/times as an option. How many steps do you think it would take to complete this task?

Recognise a new email was delivered

Read the email

Determine relevance of meeting request (another question here is: how exactly, but that is a separate topic)

Check availability for time slot 1

Check availability for time slot 2

Determine which one works better (similar: how exactly?)

Compose response with preferred time slot

Send email

Update calendar with meeting details

Monitor for confirmation

I don’t think I am inflating this everyday task and my only point here is: 20+ steps seems like a high number, but in fact even very straightforward tasks require a surprising number of steps to complete. Successfully completing only ca. 25% of these kinds of tasks is not anything you’ll likely accept from your AI system.

4. Acknowledging failure

An equally relevant use of planning abilities is to recognise that a desired goal cannot be accomplished by any plan and o1 also struggles with acknowledging this kind of failure. No more than 27% of objectively unsolvable scenarios were identified as such, whereas the model continued to generate impossible and incorrect plans in the remainder of cases.

Nota bene: This is a bit of a simplification, because 19% of these cases were returned as ‘empty plans’, but without an indication or explanation that no solution exists.

5. Gaslighting

A related, but new quality of confabulation is slightly more concerning: o1 seems to have a tendency to generate creative, yet incorrect justifications when it fails. In some instances, the model has already been noted to fabricate explanations that are not grounded in reality, a behaviour almost akin to ‘gaslighting’. For example, o1 might claim that a completion condition was briefly true during the task, and therefore the task should be considered solved, even if the goal was never completed. This behaviour is worrying as it has the potential to further erode trust in AI, particularly in high-stakes environments where accuracy and integrity are paramount. The pollution of our information ecosystem and loss of consensus reality is one of the 7 domains of AI risk identified in MIT’s AI Risk Repository, a database of over 700 AI risks.
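The ‘briefly true’ excuse is easy to check mechanically. Here is a minimal sketch of a plan validator that separates ‘the goal held at some point’ from ‘the goal holds in the final state’. It is not PlanBench’s own validation tooling, and the state representation and all names are hypothetical.

```python
TABLE = "table"

def step(on, holding, action):
    """Apply one action; raise ValueError if a precondition fails.

    `on` maps every block that is not in the hand to what it rests on.
    """
    name, block, *other = action
    on = dict(on)

    def clear(b):
        # A block is clear if it is placed somewhere and nothing rests on it.
        return b in on and b not in on.values()

    if name == "pick up" and holding is None and clear(block) and on[block] == TABLE:
        del on[block]
        return on, block
    if (name == "unstack" and holding is None and clear(block)
            and other and on[block] == other[0]):
        del on[block]
        return on, block
    if name == "put down" and holding == block:
        on[block] = TABLE
        return on, None
    if name == "stack" and holding == block and other and clear(other[0]):
        on[block] = other[0]
        return on, None
    raise ValueError(f"illegal action: {action}")

def goal_holds(on, goal):
    """True if every (upper, lower) pair of the goal holds in `on`."""
    return all(on.get(upper) == lower for upper, lower in goal)

def validate(initial_on, plan, goal):
    """Simulate the plan and report whether the goal holds at the very end."""
    on, holding = dict(initial_on), None
    ever_true = goal_holds(on, goal)
    for action in plan:
        try:
            on, holding = step(on, holding, action)
        except ValueError:
            return {"valid": False, "goal_at_end": False, "goal_ever_true": ever_true}
        ever_true = ever_true or goal_holds(on, goal)
    at_end = holding is None and goal_holds(on, goal)
    return {"valid": True, "goal_at_end": at_end, "goal_ever_true": ever_true}

# B sits on A; the goal is A on B. The last two actions undo the goal again.
start = {"A": TABLE, "B": "A"}
good_plan = [("unstack", "B", "A"), ("put down", "B"),
             ("pick up", "A"), ("stack", "A", "B")]
overshooting_plan = good_plan + [("unstack", "A", "B"), ("put down", "A")]
good_result = validate(start, good_plan, {("A", "B")})
overshoot_result = validate(start, overshooting_plan, {("A", "B")})
```

Only `goal_at_end` counts as success. A plan for which `goal_ever_true` is set but `goal_at_end` is not is exactly the case in which a ‘the condition was briefly true’ justification should be rejected.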

G. Conclusion

OpenAI’s latest release of the o1-model represents a notable leap forward in reasoning and planning tasks, significantly outperforming previous LLMs in benchmarks like PlanBench. However, these advances come with considerable limitations. More than 20x the cost, maybe 5x the inference time and inconsistent accuracy on complex tasks that require a higher number of reasoning steps will likely prevent o1 from being a truly general-purpose reasoning tool at this time. What I suspect will further prevent widespread adoption for the time being is the opaque nature of o1’s inner workings, combined with a newfound chutzpah when confabulating. Nonetheless, o1 marks an important step forward and a first working example of Chain-of-Thought application at inference with a seeming ability to revisit and evaluate previous steps, which points AI progress in the right direction of generalisation and adaptation. At the same time the path toward reliable, efficient reasoning models remains a work in progress. A critical conclusion would be that o1 shows no progress, and arguably a step backward, on one of GenAI’s most debilitating issues, its inexorable tendency to confabulate. In this sense strawberry fields are unlikely to be forever. But one problem at a time!